2013

Physical-to-Virtual Server Migration involves converting physical servers into virtual machines on platforms such as VMware or Hyper-V. The process includes preparing the servers, creating virtual copies, and conducting thorough testing to ensure seamless operation. This approach helps reduce hardware costs, improve efficiency, and simplify system management and scalability.

Job Description

Migrated physical servers to a virtualised VMware ESXi environment using converter tools and cold-cloning techniques, minimising downtime and maintaining data integrity. Managed IBM BladeCenter and Storwize SAN infrastructure supporting both Windows and Linux platforms. Implemented high-availability UNIX clusters with IBM AIX and Oracle to enhance the performance, scalability, and reliability of mission-critical applications, optimising resource utilisation, reducing operational costs, and streamlining overall system management.

2013

Physical Document Tracking System involves using barcodes or RFID tags to monitor and record the movement and location of physical documents. This system helps prevent loss, enhances security, and simplifies the retrieval and management of important paperwork.

Job Description

Developed a comprehensive physical document tracking system incorporating biometrics for user authentication, active RFID for real-time location tracking, and ID barcodes and QR codes for rapid identification and verification. The system was implemented using Java and PHP, providing a robust and scalable architecture. Fully integrated into the organisation's existing workflow, it enabled seamless document check-in/check-out, enhanced traceability, and improved security. This implementation significantly reduced document loss, streamlined retrieval processes, and strengthened compliance with internal and regulatory requirements.
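The check-in/check-out flow can be illustrated with a minimal Java sketch. It is not the production code: the class name `DocumentRegistry`, its methods, and the in-memory map are all hypothetical, and a real deployment would persist this state and take document and user IDs from the barcode, RFID, and biometric readers.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical in-memory registry: maps each document ID to the user holding it.
class DocumentRegistry {
    private final Map<String, String> holders = new HashMap<>();

    // Succeeds only if nobody currently holds the document.
    boolean checkOut(String documentId, String userId) {
        return holders.putIfAbsent(documentId, userId) == null;
    }

    // Succeeds only if the document is held by the same user returning it.
    boolean checkIn(String documentId, String userId) {
        return holders.remove(documentId, userId);
    }

    // Current holder, or empty if the document is on the shelf.
    Optional<String> holderOf(String documentId) {
        return Optional.ofNullable(holders.get(documentId));
    }

    public static void main(String[] args) {
        DocumentRegistry registry = new DocumentRegistry();
        System.out.println(registry.checkOut("DOC-1", "alice")); // first check-out
        System.out.println(registry.checkOut("DOC-1", "bob"));   // already held
        System.out.println(registry.holderOf("DOC-1"));
    }
}
```

Rejecting a second check-out of the same document is what makes every document traceable to exactly one holder at a time.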

2013

PC/Server Remote Health Monitoring Suite involves implementing a system that continuously monitors the performance and health of PCs and servers from a centralised or remote location. It tracks hardware metrics such as CPU and memory usage, disk health, temperature, and network performance, alongside software indicators including running processes, application errors, and security events. The suite typically employs specialised monitoring tools (for example, Zabbix, Nagios, or PRTG) to provide real-time alerts and detailed reports. This enables IT teams to promptly detect and resolve issues, prevent downtime, optimise resource utilisation, and maintain overall system reliability and security.

Job Description

Developed and maintained a tray application to monitor RAM, CPU, temperature, and disk health in real time. The application featured automated report generation via a scheduler or remote TCP commands, integrated TeamViewer for streamlined remote support, and included a password-protected shutdown option to enhance security and control.
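The kind of threshold check such a monitor performs can be sketched in Java. The source does not state the tray application's language or thresholds, so the class `HealthCheck`, the 80%/95% limits, and the use of JVM heap as a stand-in for system RAM are assumptions made for illustration only.

```java
import java.io.File;

// Hypothetical sketch: classify a usage percentage against warning/critical limits.
class HealthCheck {
    enum Status { OK, WARNING, CRITICAL }

    static Status classify(double usedPercent, double warnAt, double criticalAt) {
        if (usedPercent >= criticalAt) return Status.CRITICAL;
        if (usedPercent >= warnAt) return Status.WARNING;
        return Status.OK;
    }

    // Percentage of a resource in use, given its total and free amounts.
    static double usedPercent(long total, long free) {
        if (total <= 0) return 0.0;
        return 100.0 * (total - free) / total;
    }

    public static void main(String[] args) {
        // JVM heap only; reading system-wide RAM or temperature needs platform-specific APIs.
        Runtime rt = Runtime.getRuntime();
        double heap = usedPercent(rt.totalMemory(), rt.freeMemory());
        System.out.printf("JVM heap: %.1f%% %s%n", heap, classify(heap, 80, 95));

        File[] roots = File.listRoots();
        if (roots != null) {
            for (File root : roots) {
                double disk = usedPercent(root.getTotalSpace(), root.getUsableSpace());
                System.out.printf("%s %.1f%% %s%n", root.getPath(), disk, classify(disk, 80, 95));
            }
        }
    }
}
```

A scheduler could call the same checks periodically and write the results to a report.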

2013

Managed File Transfer (MFT) Solution involves securely automating and controlling the transfer of files between systems, users, and partners. It incorporates encryption, user authentication, tracking, and reporting to ensure data security, compliance, and reliability.

Job Description

Architected and implemented a Managed File Transfer (MFT) system using IBM WebSphere MQ FTE to enable secure and automated data synchronisation across remote sites in Sabah. This solution enhanced data transfer reliability, improved operational efficiency, and ensured secure, centralised control over critical file exchanges.

2016

Thin Clients for Education involve using lightweight computer terminals that rely on a central server to perform the majority of processing tasks. In educational settings, this approach enables schools or training centres to provide multiple student workstations at a lower cost, with easier maintenance and centralised management. Students access applications, files, and resources hosted on a main server, ensuring consistent performance and simplifying software updates. This setup is ideal for computer labs, libraries, and shared learning spaces, promoting efficient resource use and reducing IT overhead.

Job Description

Co-designed and implemented a thin client infrastructure using NComputing devices in combination with Microsoft MultiPoint Server. This solution provided centralised computing power, allowing multiple student workstations to operate from a single server. The setup significantly reduced hardware and maintenance costs while ensuring consistent performance and simplified software management. By enabling easy access to educational applications and shared resources, the system created an efficient, scalable, and cost-effective computing environment tailored to the needs of classrooms and training labs.

2016

Document Digitisation & Archival involves converting physical documents into digital form and securely storing them in an organised system. This process improves accessibility, preserves records over the long term, reduces physical storage requirements, and supports easier search and retrieval.

Job Description

Built a Java-based system to scan, process, and archive documents as TIFF or PDF files using the SceyeX-A3 scanner. Integrated storage with a NAS for secure, centralised file management, and logged all activities in an Oracle database. Provided features for easy document retrieval, preview, and printing, improving the efficiency and accessibility of records.

2017

Central Network Monitoring System involves using a centralised platform to continuously track the health, performance, and security of all network devices and services. It helps detect issues early, optimise performance, and ensure reliable, secure network operations.

Job Description

Implemented a centralised monitoring and alert system covering over 100 servers, more than 50 storage devices, 30+ UPS units, and multiple environmental sensors. Enabled real-time performance tracking, automated alerts for critical issues, and improved overall infrastructure reliability and response times.

2018

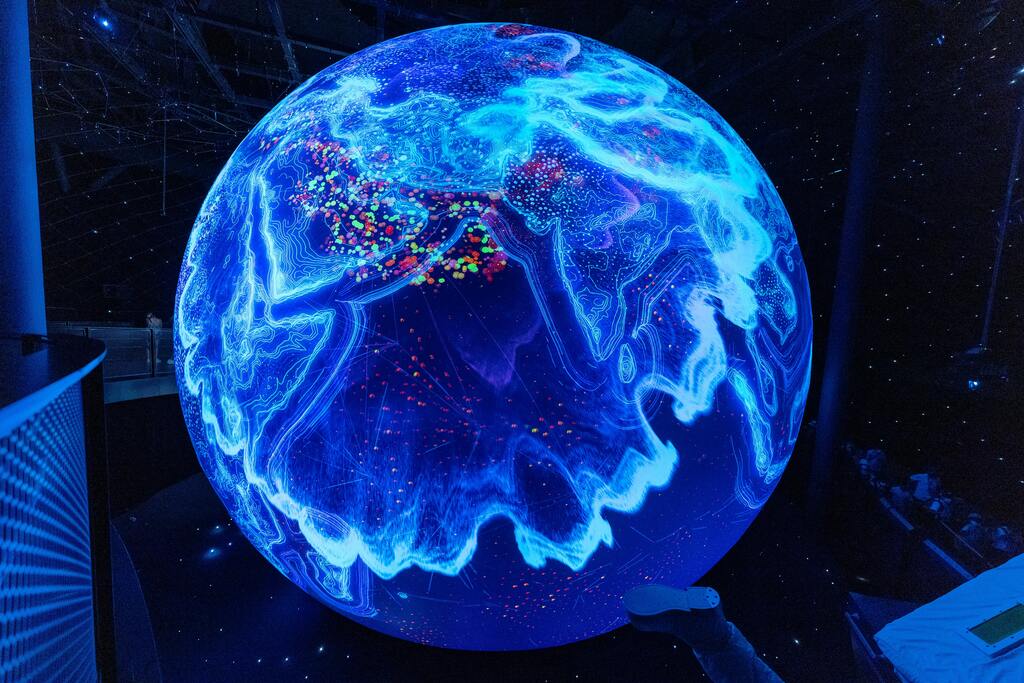

Geospatial Data Consolidation and Analytics involves combining data from multiple geographic sources, cleaning and standardising it, and analysing spatial patterns to support improved decision-making and planning.

Job Description

Integrated spatial and non-spatial data into a central data warehouse to enable business intelligence analysis and support data monetisation strategies. Published and shared data using SQL Spatial and WMS/WFS services, leveraging tools such as ESRI, GeoServer, Tableau, MSSQL Spatial, PostGIS and Oracle Spatial. This approach improved data accessibility, enhanced decision-making, and created new opportunities for revenue generation.

Ongoing

Feature Manipulation Engine (FME) is a data integration tool used to convert, transform, and automate workflows for spatial and non-spatial data. It involves extracting data from multiple sources, transforming it into usable formats, and loading it into target systems to support analysis and decision-making.

Job Description

Used Safe Software's FME suite to integrate, manipulate, and transform business data from multiple sources, ensuring data consistency and accuracy. Enabled seamless downstream analytics in tools such as Tableau and supported integration with GIS platforms including ArcGIS and GeoMedia. This streamlined data workflows, improved analytical capabilities, and enhanced overall data-driven decision-making.

Ongoing

Data Analytics using Tableau involves visualising and analysing data through interactive dashboards and reports. It helps uncover insights, identify trends, and support data-driven decision-making by turning complex data into clear, actionable visualisations.

Job Description

Performed data consolidation and business intelligence analytics using Tableau (versions 8 to current), integrating data from geographically distributed systems. Enabled clear, interactive visualisations and dashboards to support strategic decision-making and uncover key operational insights.

Ongoing

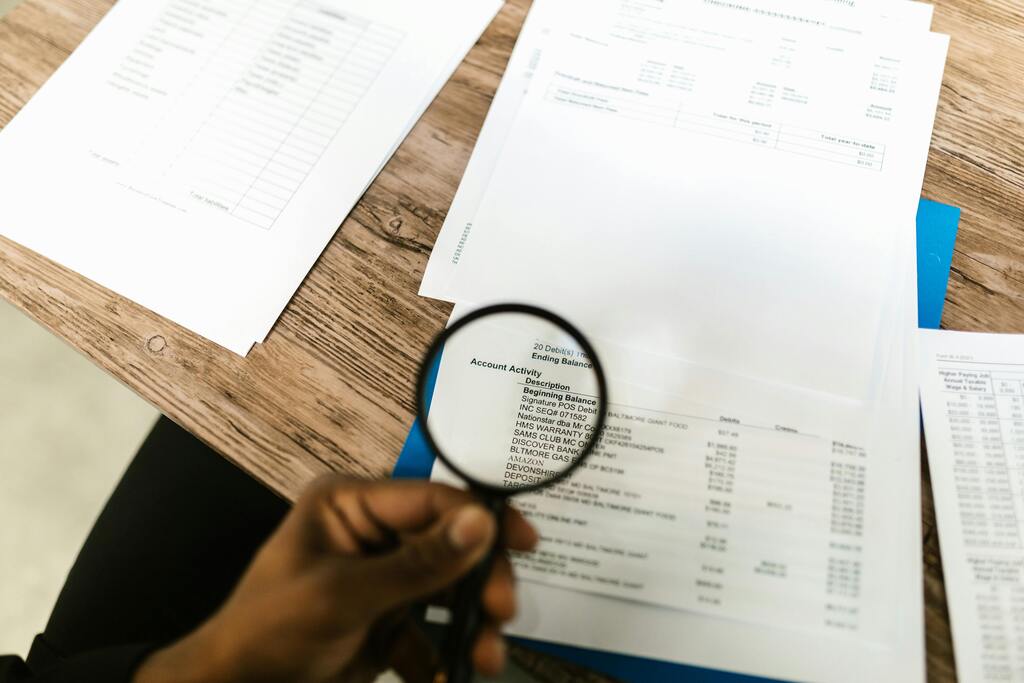

Document Verification using Aztec Code involves embedding secure, encrypted codes (similar to advanced QR codes) on documents to verify their authenticity. It includes generating unique codes, linking them to a secure database, and enabling fast, reliable validation via scanning, helping to prevent fraud and ensure document integrity.

Job Description

Created a document integrity verification system using encrypted Aztec Codes printed on physical documents, enabling quick and secure authenticity checks. Developed a Java-based web service deployed on Apache Tomcat or GlassFish servers to allow seamless integration. The system has been successfully adopted by both government agencies and commercial organisations to prevent fraud and ensure document trustworthiness.
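One way a code payload can be made tamper-evident is sketched below in Java. This is an illustration, not the deployed scheme: the source describes encrypted codes, whereas this sketch swaps in HMAC-SHA256 signing, and the class `DocumentSeal`, the `|` separator, and the sample data are hypothetical. Rendering the payload as a 2D barcode would need a separate library and is omitted.

```java
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.util.Base64;

// Hypothetical sketch: append an HMAC tag to document data so tampering is detectable.
class DocumentSeal {
    private final SecretKeySpec key;

    DocumentSeal(byte[] secret) {
        this.key = new SecretKeySpec(secret, "HmacSHA256");
    }

    private byte[] tag(String data) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(key);
            return mac.doFinal(data.getBytes(StandardCharsets.UTF_8));
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HMAC-SHA256 unavailable", e);
        }
    }

    // Payload to encode on the document: data, separator, URL-safe Base64 tag.
    String seal(String documentData) {
        String encoded = Base64.getUrlEncoder().withoutPadding().encodeToString(tag(documentData));
        return documentData + "|" + encoded;
    }

    // Recomputes the tag from the scanned payload and compares in constant time.
    boolean verify(String payload) {
        int sep = payload.lastIndexOf('|');
        if (sep < 0) return false;
        try {
            byte[] claimed = Base64.getUrlDecoder().decode(payload.substring(sep + 1));
            return MessageDigest.isEqual(tag(payload.substring(0, sep)), claimed);
        } catch (IllegalArgumentException e) {
            return false; // tag was not valid Base64
        }
    }

    public static void main(String[] args) {
        DocumentSeal seal = new DocumentSeal("demo-secret".getBytes(StandardCharsets.UTF_8));
        String payload = seal.seal("CERT-001|2018-05-01");
        System.out.println(seal.verify(payload));
        System.out.println(seal.verify(payload.replace("CERT-001", "CERT-002")));
    }
}
```

Because the tag depends on a server-side secret, a verification web service can reject any payload whose data was altered after issuance.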